I haven’t written a blog post in a while (I’ve been busy with the new job at Tanium), but I did write this script recently and thought I would share it in case anyone else finds it interesting. Share it forward.

Problem

I’ve been working on solutions to upgrade Windows 7 to Windows 10 using Tanium as the delivery platform (it’s pretty awesome, if I do say so myself). But as with all solutions, I need to test the system end to end.

As with most of my OS Deployment work, the Code was easy, the testing is HARD!

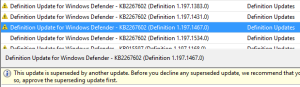

So I needed to create some Windows 7 images with the latest updates. MDT to the rescue! I created an MDT Deployment Share (thanks Ashish ;^), then created a Media Share to contain each Task Sequence. With some fancy CustomSettings.ini work and some PowerShell glue logic, I can now re-create the latest Windows 7 SP1 patched VHD and/or WIM file at a moment’s notice.

Solution

First of all, you need an MDT Deployment Share with a standard Build and Capture Task Sequence. A Build and Capture Task Sequence is just the standard Client.xml task sequence, but we’ll override it to capture the image at the end.

In my case, I decided NOT to use MDT to capture the image into a WIM file at the end of the Task Sequence. Instead, I just have MDT perform the Sysprep and shut down. Then I can use PowerShell on the Host to perform the conversion from VHDX to WIM.

And when I say Host, I mean that all of my reference Images are built using Hyper-V, that way I don’t have any excess OEM driver junk, and I can spin up the process at any time.

To fully automate the process, for each MDT “Media” entry I add the following line to the BootStrap.ini file:

SkipBDDWelcome=YES

and the following lines into my CustomSettings.ini file:

SKIPWIZARD=YES ; Skip Starting Wizards

SKIPFINALSUMMARY=YES ; Skip Closing Wizards

ComputerName=* ; Auto-Generate a random Computer Name

DoCapture=SYSPREP ; Run SysPrep, but don't capture the WIM.

FINISHACTION=SHUTDOWN ; Just Shutdown

AdminPassword=P@ssw0rd ; Any Password

TASKSEQUENCEID=ICS001 ; The ID for your TaskSequence (Upper Case)
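For orientation, here is a sketch of where those lines might sit in each file. It assumes the stock MDT layout, where a `[Settings]` section points `Priority` at a `[Default]` section; if your Media entry already defines other sections or properties, keep them and only add the lines shown.

```ini
; BootStrap.ini for the Media entry (assumed stock layout)
[Settings]
Priority=Default

[Default]
SkipBDDWelcome=YES
```

```ini
; CustomSettings.ini for the Media entry (assumed stock layout)
[Settings]
Priority=Default

[Default]
SKIPWIZARD=YES
SKIPFINALSUMMARY=YES
ComputerName=*
DoCapture=SYSPREP
FINISHACTION=SHUTDOWN
AdminPassword=P@ssw0rd
TASKSEQUENCEID=ICS001
```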

Now it’s just a matter of building the LitetouchMedia.iso image, mounting to a Hyper-V Virtual Machine, and capturing the results.

Orchestrator

What I present here is the PowerShell script used to orchestrate the creation of a VHDX file from an MDT Litetouch Media build.

- The script will prompt for the location of your MDT Deployment Share, or you can pass it in as a command-line argument.

- The script will open the Deployment Share and enumerate all Media entries, prompting you to select which ones to use.

- For each Media entry selected, the script will:

  - Force MDT to update the Media build (just to be sure)

  - Create a new Virtual Machine (and blow away the old one)

  - Create a new VHD file, and mount it into the Virtual Machine

  - Mount the LitetouchMedia.iso file into the Virtual Machine

  - Start the VM

- The script will wait for MDT to auto-generate the build.

- Once done, for each Media entry selected, the script will:

  - Dismount the VHDx

  - Create a WIM file (compression type None)

  - Auto-generate a cleaned VHDx file
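The wait step in the script polls until the VM reports `Off`, with no upper bound, so a hung Task Sequence would keep it waiting forever. Below is a minimal sketch of a bounded wait. `Wait-VMShutdown` and its `-GetState` parameter are hypothetical names introduced for illustration (they are not part of Hyper-V or of the script), and the four-hour default timeout is an arbitrary assumption.

```powershell
# Sketch only: Wait-VMShutdown is a hypothetical helper, not a Hyper-V cmdlet.
function Wait-VMShutdown {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $VMName,
        [timespan] $Timeout = [timespan]::FromHours(4),   # assumed upper bound
        [int] $PollSeconds = 15,
        # Injectable so the loop can be exercised without Hyper-V;
        # by default it asks Hyper-V for the VM state.
        [scriptblock] $GetState = { param($Name) (Get-VM -Name $Name).State }
    )

    $deadline = [datetime]::Now + $Timeout
    $stopped  = $false

    while ( -not $stopped -and [datetime]::Now -lt $deadline ) {
        $state = & $GetState $VMName
        if ( "$state" -eq 'Off' ) {
            $stopped = $true
        }
        else {
            Write-Progress -Activity "Waiting for $VMName" -Status "State: $state"
            Start-Sleep -Seconds $PollSeconds
        }
    }

    Write-Progress -Activity "Waiting for $VMName" -Completed
    return $stopped   # $true if the VM shut down, $false if the timeout expired
}
```

In the orchestration loop this could be used as `if ( -not ( Wait-VMShutdown -VMName $VMName ) ) { throw "Build of $VMName did not finish in time" }`, so a stuck build fails loudly instead of blocking the remaining Media entries.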

Code

The code shows how to use PowerShell to:

- Connect to an existing MDT Deployment Share

- Extract Media information, and rebuild Media

- Create a Virtual Machine and assign resources

- Monitor a Virtual Machine

- Capture and apply WIM images to VHDx virtual disks

```powershell
#Requires -RunAsAdministrator

<#
.Synopsis
    Auto create a VM from your MDT Deployment Media
.DESCRIPTION
    Given an MDT Litetouch Deployment Share, this script will enumerate
    through all "Offline Media" shares, allow you to select one or more,
    and then auto-update and auto-create the Virtual Machine.

    Ideal to create base reference images (like Windows7).
.NOTES
    In addition to the default settings for your CustomSettings.ini file,
    you should also have the following defined for each Media Share:

    SKIPWIZARD=YES           ; Skip Starting Wizards
    SKIPFINALSUMMARY=YES     ; Skip Closing Wizards
    ComputerName=*           ; Auto-Generate a random computername
    DoCapture=SYSPREP        ; Run SysPrep, but don't capture the WIM.
    FINISHACTION=SHUTDOWN    ; Just Shutdown
    AdminPassword=P@ssw0rd   ; Any Password
    TASKSEQUENCEID=ICS001    ; The ID for your TaskSequence (allCaps)

    Also requires https://github.com/keithga/DeploySharedLibrary powershell library
#>

[cmdletbinding()]
param(
    # Mandatory, so PowerShell prompts when this is omitted and the default is never used.
    [Parameter(Mandatory=$true)]
    [string] $DeploymentShare = 'G:\Projects\DeploymentShares\DeploymentShare.Win7SP1',
    [int] $VMGeneration = 1,
    [int64] $MemoryStartupBytes = 4GB,
    [int64] $NewVHDSizeBytes = 120GB,
    [version]$VMVersion = '5.0.0.0',
    [int] $ProcessorCount = 4,
    [string] $ImageName = 'Windows 7 SP1',
    $VMSwitch,
    [switch] $SkipMediaRebuild
)

Start-Transcript

#region Initialize

if ( -not ( Get-Command 'Convert-WIMtoVHD' -ErrorAction SilentlyContinue ) ) { throw 'Missing https://github.com/keithga/DeploySharedLibrary' }

# On most of my machines, at least one switch will be external to the internet.
if ( -not $VMSwitch ) { $VMSwitch = Get-VMSwitch -SwitchType External | Where-Object Name -NotLike 'Hyd-CorpNet' | Select-Object -First 1 -ExpandProperty Name }
if ( -not $VMSwitch ) { throw "missing Virtual Switch" }

Write-Verbose "Virtual switch: $VMSwitch"

#endregion

#region Open MDT Deployment Share

$MDTInstall = Get-ItemProperty 'HKLM:\SOFTWARE\Microsoft\Deployment 4' | Select-Object -ExpandProperty Install_Dir
$MDTModule  = Join-Path $MDTInstall 'Bin\MicrosoftDeploymentToolkit.psd1'
if ( -not ( Test-Path $MDTModule ) ) { throw "Missing MDT" }

# Import from the detected install directory rather than a hard-coded path.
Import-Module -Force $MDTModule -ErrorAction SilentlyContinue -Verbose:$false
New-PSDrive -Name "DS001" -PSProvider "MDTProvider" -Root $DeploymentShare -Description "MDT Deployment Share" -Verbose -Scope script | Out-String | Write-Verbose

$OfflineMedias = Get-ChildItem DS001:\Media | Select-Object -Property * | Out-GridView -OutputMode Multiple
$OfflineMedias | Out-String | Write-Verbose

#endregion

#region Create a VM for each Offline Media Entry and Start

foreach ( $Media in $OfflineMedias ) {

    $Media | Out-String | Write-Verbose
    $VMName = Split-Path $Media.Root -Leaf

    # Remove any previous VM and virtual disk with the same name.
    Get-VM $VMName -ErrorAction SilentlyContinue | Stop-VM -TurnOff -Force -ErrorAction SilentlyContinue
    Get-VM $VMName -ErrorAction SilentlyContinue | Remove-VM -Force

    $VHDPath = Join-Path ((Get-VMHost).VirtualHardDiskPath) "$($VMName).vhdx"
    Remove-Item $VHDPath -ErrorAction SilentlyContinue -Force | Out-Null

    $ISOPath = "$($Media.Root)\$($Media.ISOName)"

    if ( -not $SkipMediaRebuild ) {
        Write-Verbose "Update Media $ISOPath"
        # Strip the provider prefix ("<provider>::") from PSPath to get the drive-qualified path.
        Update-MDTMedia $Media.PSPath.Substring($Media.PSProvider.ToString().Length + 2)
    }

    $NewVMHash = @{
        Name               = $VMName
        MemoryStartupBytes = $MemoryStartupBytes
        SwitchName         = $VMSwitch
        Generation         = $VMGeneration
        Version            = $VMVersion
        NewVHDSizeBytes    = $NewVHDSizeBytes
        NewVHDPath         = $VHDPath
    }

    New-VM @NewVMHash -Force
    Add-VMDvdDrive -VMName $VMName -Path $ISOPath
    Set-VM -Name $VMName -ProcessorCount $ProcessorCount
    Start-VM -Name $VMName

}

#endregion

#region Wait for process to finish, and extract VHDX

foreach ( $Media in $OfflineMedias ) {

    $VMName = Split-Path $Media.Root -Leaf

    [datetime]::Now | Write-Verbose
    Get-VM -Name $VMName <# -ComputerName $CaptureMachine #> | Out-String | Write-Verbose

    # Poll once a second until the VM has shut itself down (FINISHACTION=SHUTDOWN).
    while ( $x = Get-VM -Name $VMName | Where-Object State -ne 'Off' ) { Write-Progress -Activity "$($x.Name) - Uptime: $($x.Uptime)" ; Start-Sleep 1 }

    Get-VM -Name $VMName | Out-String | Write-Verbose
    [datetime]::Now | Write-Verbose

    Start-Sleep -Seconds 10

    $VHDPath = Join-Path ((Get-VMHost).VirtualHardDiskPath) "$($VMName).vhdx"
    Dismount-VHD -Path $VHDPath -ErrorAction SilentlyContinue

    $WIMPath = Join-Path ((Get-VMHost).VirtualHardDiskPath) "$($VMName).WIM"

    Write-Verbose "Convert-VHDToWIM -ImagePath '$WIMPath' -VHDFile '$VHDPath' -Name '$ImageName' -CompressionType None -Turbo -Force"
    Convert-VHDtoWIM -ImagePath $WIMPath -VHDFile $VHDPath -Name $ImageName -CompressionType None -Turbo -Force

    Write-Verbose "Convert-WIMtoVHD -ImagePath $WIMPath -VHDFile '$($VHDPath).Compressed.vhdx' -Name $ImageName -Generation $VMGeneration -SizeBytes $NewVHDSizeBytes -Turbo -Force"
    Convert-WIMtoVHD -ImagePath $WIMPath -VHDFile "$($VHDPath).Compressed.vhdx" -Name $ImageName -Generation $VMGeneration -SizeBytes $NewVHDSizeBytes -Turbo -Force

}

#endregion

Stop-Transcript
```
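One line in the script that is easy to misread is the `Substring` call passed to `Update-MDTMedia`. Every provider item carries a `PSPath` of the form `<provider name>::<path>`, and the call strips that prefix by skipping the length of the provider name plus the two colons. The sketch below shows the same trick on the built-in FileSystem provider, which runs anywhere; for the MDT provider the result should presumably be a drive-qualified path such as `DS001:\Media\MEDIA001`, though that is not verified here.

```powershell
# Any provider item exposes PSPath (provider-qualified) and PSProvider.
$item = Get-Item -LiteralPath $PSHOME

# For the file system this is 'Microsoft.PowerShell.Core\FileSystem'.
$providerName = $item.PSProvider.ToString()

# PSPath is '<provider name>::<path>', so skip the name plus the two colons.
$plainPath = $item.PSPath.Substring($providerName.Length + 2)

# Emits the path without the provider prefix.
$plainPath
```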

Notes

I’ve been struggling with how to create an MDT VHDx file with the smallest possible size. I tried tools like Optimize-Drive and sDelete.exe to clear out as much space as possible, but I’ve been disappointed with the results. So here I’m using a technique to capture the VHDx file as a volume to a WIM file (uncompressed for speed), and then apply the capture back to a new VHDx file. Because a WIM capture copies files rather than disk blocks, that should ensure that no deleted files are transferred. Overall results are good:

Before: 19.5 GB VHDx file --> 7.4 GB compressed zip

After: 13.5 GB VHDx file --> 5.6 GB compressed zip
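The script leans on `Convert-VHDtoWIM` and `Convert-WIMtoVHD` from the DeploySharedLibrary. For readers without that library, the capture half of the technique can be roughly sketched with only the built-in Hyper-V and DISM cmdlets. This is not the library's implementation: the function name `Export-VhdVolumeToWim` is hypothetical, and the "largest partition with a drive letter is the OS volume" heuristic is an assumption that may not hold for every disk layout. It needs an elevated session on a Hyper-V host.

```powershell
# Sketch only: Export-VhdVolumeToWim is a hypothetical name, not a library function.
function Export-VhdVolumeToWim {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $VHDPath,
        [Parameter(Mandatory)] [string] $WIMPath,
        [string] $ImageName = 'Windows 7 SP1'
    )

    # Mount read-only so the source disk is never modified.
    $disk = Mount-VHD -Path $VHDPath -ReadOnly -Passthru | Get-Disk
    try {
        # Assumption: the largest partition with a drive letter is the OS volume.
        $osPartition = $disk | Get-Partition |
            Where-Object { $_.DriveLetter } |
            Sort-Object -Property Size -Descending |
            Select-Object -First 1
        if ( -not $osPartition ) { throw "No lettered partition found in $VHDPath" }

        # File-level capture: only live files are copied, so deleted data is left behind.
        New-WindowsImage -CapturePath "$($osPartition.DriveLetter):\" -ImagePath $WIMPath -Name $ImageName -CompressionType None | Out-Null
    }
    finally {
        # Always release the VHDX, even if the capture fails.
        Dismount-VHD -Path $VHDPath
    }
}
```

The apply half would go the other way: create and format a fresh VHDX, then use `Expand-WindowsImage` with `-ApplyPath` to lay the files back down and make the disk bootable again. That part is left out here because the partitioning details differ between Generation 1 and Generation 2 VMs.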

Links

Gist: https://gist.github.com/keithga/21007d2aeb310a57f58392dfa0bdfcc2

https://wordpress.com/read/feeds/26139167/posts/2120718261

https://community.tanium.com/s/article/How-to-execute-a-Windows-10-upgrade-with-Tanium-Deploy-Setup
